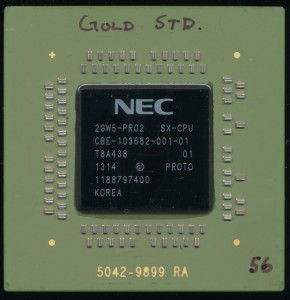

Sun SME1832ABGA PG 2.2.0 UltraSPARC RK – 2007 Sample

In 2005 Sun (now Oracle) began work on a new UltraSPARC, the Rock, or RK for short. The RK was to introduce several innovative technologies to the SPARC line and would complement the T-series, which was also in development at the time (and is still used). The RK was to support transactional memory, a way of handling memory access that more closely resembles database usage (important in the database server market). Greatly simplified, it allows the processor to hold, or buffer, the results of multiple instructions (loads/stores) as a group, and then write the entire batch to memory once all have finished. The group is a transaction, and the result of that transaction is stored atomically, as if it were the result of a single instruction.
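The buffer-then-commit idea can be sketched in a few lines of Python. This is only a software toy under stated assumptions, not how the RK implemented it in hardware: the `Transaction` class, its methods, and the `transfer` helper are hypothetical names, memory is modeled as a plain dictionary, and conflicts are detected by comparing values, whereas real hardware would typically track cache lines.

```python
class Transaction:
    """Toy model: stores are buffered privately and written back as one batch."""

    def __init__(self, memory):
        self.memory = memory      # shared "memory": address -> value
        self.write_buffer = {}    # stores not yet visible outside the transaction
        self.read_set = {}        # address -> value seen at first load

    def load(self, addr):
        # A transaction sees its own buffered stores first.
        if addr in self.write_buffer:
            return self.write_buffer[addr]
        value = self.memory.get(addr, 0)
        self.read_set.setdefault(addr, value)
        return value

    def store(self, addr, value):
        # Nothing reaches shared memory until commit().
        self.write_buffer[addr] = value

    def commit(self):
        # Abort if anything we read has changed since we read it.
        for addr, seen in self.read_set.items():
            if self.memory.get(addr, 0) != seen:
                return False
        # Otherwise publish every buffered store in one step.
        self.memory.update(self.write_buffer)
        return True


def transfer(memory, src, dst, amount):
    """Move `amount` from src to dst, retrying until the transaction commits."""
    while True:
        tx = Transaction(memory)
        tx.store(src, tx.load(src) - amount)
        tx.store(dst, tx.load(dst) + amount)
        if tx.commit():
            return
```

Calling `transfer(memory, "a", "b", 30)` on `{"a": 100, "b": 0}` leaves memory untouched until the commit, at which point both updates appear together. If another writer changes `"a"` in between, `commit()` returns `False` and nothing is written, which is the all-or-nothing behavior described above.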

The RK was also designed as a 16-core processor, with the cores arranged in 4 clusters of 4. This is where the definition of a core becomes a source of much debate. Each 4-core cluster shared a single 32KB instruction cache, a pair of 32KB data caches, and 2 floating point units (one of which only handled multiplies). This type of arrangement is often called Clustered Multi-threading (CMT). Since floating point instructions are not all that common in a database system, it made sense to share the FPU resources amongst multiple ‘cores.’
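To put the sharing in perspective, the chip-wide totals follow directly from the per-cluster figures above. A quick back-of-the-envelope calculation in Python (the variable names are only illustrative, and only first-level caches mentioned in the text are counted):

```python
CORES = 16
CORES_PER_CLUSTER = 4
ICACHE_KB_PER_CLUSTER = 32        # one shared instruction cache per cluster
DCACHES_PER_CLUSTER = 2           # a pair of data caches per cluster
DCACHE_KB = 32
FPUS_PER_CLUSTER = 2              # one of the two handles only multiplies

clusters = CORES // CORES_PER_CLUSTER
total_icache_kb = clusters * ICACHE_KB_PER_CLUSTER
total_dcache_kb = clusters * DCACHES_PER_CLUSTER * DCACHE_KB
total_fpus = clusters * FPUS_PER_CLUSTER
cores_per_fpu = CORES // total_fpus

print(clusters, total_icache_kb, total_dcache_kb, total_fpus, cores_per_fpu)
# prints: 4 128 256 8 2
```

So the 16 ‘cores’ share 8 FPUs between them, two cores to each FPU, and only half of those FPUs can execute anything other than a multiply.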

The RK was designed for a 65nm process with a target frequency of 2.3GHz, while consuming a rather incredible 250W (more power than an entire PC drew on average at the time).

AMD A6-4400M – 2 ‘cores’ with shared FPU and cache – Piledriver Architecture

This should sound familiar, as it's also the basis of the AMD Bulldozer (and later) cores released in 2011. AMD refers to them as Modules rather than clusters, but the principle is the same. A Module has 2 integer units, each with its own 16K data cache. A 64K instruction cache and a single floating point unit are shared between the two. The third generation (Steamroller) added a second instruction decoder to each module.

The idea of CMT, however, is not new. Its roots go all the way back to the Alpha 21264 in 1996, nearly 10 years before the RK. The 21264 had 2 integer ALUs and an FPU (the FPU was technically 2 FPUs, though one only handled FMUL, while the other handled the rest of the FPU instructions). The integer ALUs each had their own register file and address ALU, and each was referred to as a cluster. Today the DEC 21264 could very well have been marketed as a dual core processor.

The SPARC RK turned out to be better on paper than in silicon. In 2009 Oracle purchased Sun and in 2010 the RK was canceled by Larry Ellison. Ellison, never one to mince his words, said of the RK: “This processor had two incredible virtues: It was incredibly slow and it consumed vast amounts of energy. It was so hot that they had to put about 12 inches of cooling fans on top of it to cool the processor. It was just madness to continue that project.” While the Rock (lava rock perhaps?) never made it to market, samples were made and tested, and a great deal was learned from it. That experience certainly made its way into Oracle’s other T-Series processors.