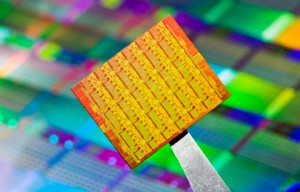

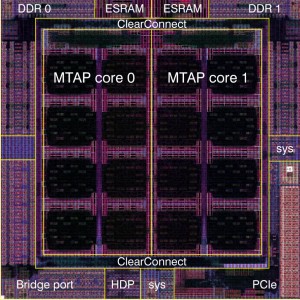

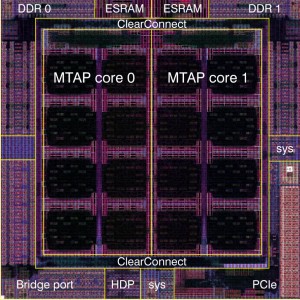

Intel 48 core Single Chip Cloud Processor

Recently two companies announced 48 core processors. Intel announced that it is working on a 48 core processor concept for smart phones and tablets. The company believes it will be in use within 10 years, which is an eternity in computer terms. Meanwhile Cavium, a maker of MIPS based networking processors, announced a new 64-bit MIPS based 48 core networking processor. The Octeon III, running at 2.5GHz, is expected to begin shipping soon. Cavium already makes and ships a 32 core MIPS processor. So clearly multi-core processors are not something we need to wait 10 years for.

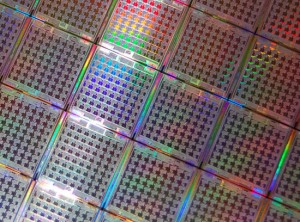

Tilera, another processor company, is ramping up production of the TILE-Gx family. This processor, running at 1-1.2GHz, supports from 9 to 100 cores (36 core versions are currently shipping). NetLogic (now owned by Broadcom) made a 32 core MIPS64 processor, and Azul Systems has been shipping a 54 core processor for several years now. Adapteva is now shipping a custom 64 core processor (the Epiphany-IV). This design is expected to scale to many thousands of cores.

Why is this all important?

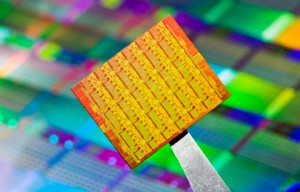

Tilera multi-core wafer

Your personal computer, which typically runs a dual or quad core processor, or perhaps even a new Intel 10 core Xeon, is practical for most general computing, but these processors are not adequate for many jobs. Ramping up clock speed (the GHz wars) was long thought to be the solution to increasing performance in computing. Making the pipe faster and faster, and reducing the bottlenecks that fed it new instructions (memory, disk, cache, etc.), was the proposed answer to the need for more performance. To a point it worked, until a wall was hit: power and thermal requirements. With increasing clock speed, processors ran hotter and began drawing immense amounts of current (some processors were pulling well over 100 amps, albeit at low voltage). This was somewhat alleviated by process shrinks, but performance per watt was still decreasing.

Many computing tasks are repetitive: they do the exact same thing to each item in a set of data, and the results are not interdependent, meaning A does not have to happen before you can do B. You can perform an operation on A, B and C all at once and then spit out the results. This is typically true of processing network data, video, audio, and many other tasks. Coding and compiling methods had to be updated to allow programs to run in many ‘threads’, which could be split amongst many cores (either real or virtual) on a processor, but once that was done, the performance gains were tremendous.
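As a minimal sketch of that idea, the Python snippet below applies the same operation to every item in a data set using a pool of workers. The `brighten` function and the pixel values are hypothetical stand-ins for real per-item work such as a video filter; they are not taken from any of the processors discussed here. Because no item depends on another, the work can be handed to the workers in any order.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int) -> int:
    """Hypothetical per-item operation: brighten one 8-bit pixel value."""
    return min(pixel + 40, 255)


def brighten_all(pixels, workers=4):
    # Each pixel is independent of the others, so the pool is free to
    # process them concurrently. map() still returns results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, pixels))


if __name__ == "__main__":
    print(brighten_all([0, 100, 230]))  # prints [40, 140, 255]
```

One caveat: in standard CPython, threads share a global interpreter lock, so for CPU-heavy work `ProcessPoolExecutor` from the same module is the usual way to actually occupy several cores. The structure of the code stays the same.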

Clearspeed CSX700 192 cores @ 250MHz

This allows a processor to deliver increased performance at a relatively low clock speed. Workloads can also be balanced: a task that does not lend itself to parallelism can be assigned to a single core, while the other cores stay busy doing other work.

There are several main benefits to multi-cores:

Increased performance for parallel tasks: This was the original goal: split a single problem into many smaller ones, and process them all at once. That is why massively multi-core processors began in the embedded world, dealing with digital signal processing and networking.

Dynamic Performance: Dynamic clocking of multi-core processors has led to tremendous power savings. Some tasks don’t need all the performance on all the cores, so a modern multi-core processor can dynamically scale the clock speed, and voltage, of each core, as needed. If not all cores are needed, they can be put to sleep, saving power. If a non-parallel task is encountered, a single core can be dedicated to it, at an increased clock speed.

Upgradeability: If a system is designed correctly, and the code is written and compiled well, the system does not know or care how many cores the processor has. This means that performance can, in general, be upgraded just by swapping out the physical silicon for a part with more cores. This is common in larger supercomputers and other systems. HP even made a custom Itanium, called the Hondo MX2, that integrated 2 Madison cores on a single Itanium module. This allowed their Superdome servers to be upgraded with nothing more than a processor swap, much cheaper than replacing the entire server.
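The upgradeability point, software that does not care how many cores it runs on, can be illustrated with a short Python sketch. The function names and the sample data here are hypothetical; the only assumption is that the program asks the operating system for the core count at run time instead of hard-coding it.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def worker_count() -> int:
    # os.cpu_count() can return None when the count is unknown,
    # so fall back to a single worker in that case.
    return os.cpu_count() or 1


def sum_chunks(chunks):
    # The pool is sized from the machine it runs on. Nothing in this
    # function changes if the processor is swapped for one with more cores.
    with ThreadPoolExecutor(max_workers=worker_count()) as pool:
        return list(pool.map(sum, chunks))


if __name__ == "__main__":
    print(worker_count(), "logical cores reported")
    print(sum_chunks([[1, 2], [3, 4, 5]]))  # prints [3, 12]
```

The same program would report 4 on a quad core desktop and 48 on a 48 core part, with no source changes.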

Not all tasks are easily handled in a parallel fashion, and for this reason clock speed is still important in applications where B cannot happen until A is complete (data dependencies). There are, and will continue to be, systems where this is the focus, such as the IBM zEC12, which runs at a stunning 5.5GHz. However, as power becomes a more and more important aspect of computing, we will continue to see an ever increasing number of cores per chip in many applications. Is there a limit? Many think not, and Intel has made a case for the use of 1000+ core processors.
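The effect of those data dependencies on core count can be estimated with Amdahl's law, which is not named above but is the standard way to reason about it: if a fraction p of a job can run in parallel across n cores, the best possible speedup is 1 / ((1 - p) + p / n). The sketch below is a simplified model that ignores memory, communication and scheduling overhead, and the 90% figure is an illustrative assumption, not a measurement.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup for a job whose parallel part scales perfectly."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assume 90% of the work is parallel and 10% must run on one core.
    for cores in (4, 48, 1000):
        print(cores, "cores:", round(amdahl_speedup(0.9, cores), 2))
```

Under that assumption, going from 48 to 1000 cores adds little, because the serial 10% dominates. That is why a fast single core still matters for dependency-bound work, while very high core counts pay off for workloads that are almost entirely parallel.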